Build Pipeline

I’m really happy with how far we’ve come with our build pipeline in the past months. We now have a truly multi-project, multi-platform, distributed build pipeline for our daily builds, along with a common programming framework for build tools and a few C# GUI tools which simplify its usage and are generally more pleasing to the eye than a raw DOS shell window.

Let’s start with the multi-project aspect. At Radon Labs there are usually several projects in flight at the same time. All projects are based on Nebula (but not all run on top of Nebula3; we may decide to start a project on the older Nebula2/Mangalore if it makes sense). We have always had a standardized project structure, daily builds, and rules for what a project’s build script looks like, but we had to deal with a few detail problems which were often pushed into the future because there were more important problems to fix.

One of the fairly critical problems was the lack of a proper toolkit version history and of a more flexible toolkit update process. In the past we only had one current toolkit version, which was updated through a patching process. Toolkit updates are very frequent, from about once a week to a few times per day. It may happen that a new toolkit version breaks file format compatibility with older versions. That happens less often, maybe once every few months. But it becomes a problem if a project decides to create an engine branch and is thus decoupled from engine development on the main branch. Branching makes sense if the project is going into beta and stability is more important than new engine features. The problem is that the project may come to a point where the toolkit can no longer be updated with the latest version from the main branch, because the main branch introduced some incompatibility.

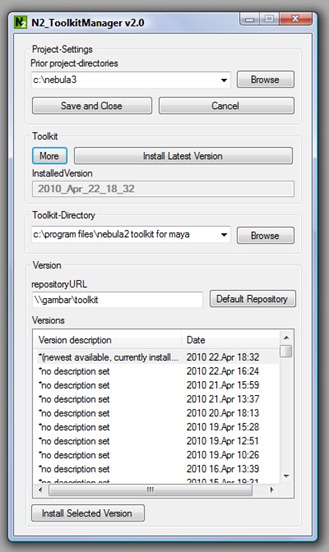

What’s needed is a way for the lead programmer to “pin” a specific toolkit version to his project. We solved this problem with a new “Toolkit Manager” tool which tracks a history of previous versions and makes sure that the latest, or the “right”, toolkit version is installed:

When switching to a new project, the Toolkit Manager automatically installs the right toolkit version (only if necessary), but it’s also possible to manually select and install a specific toolkit version.
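The selection logic described above can be sketched in a few lines of Python. This is only an illustration, not the actual Toolkit Manager code: the `Project` class, the `pinned_toolkit` field and the idea of a plain list as version history are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Project:
    """Hypothetical project record; not the real Toolkit Manager data model."""
    name: str
    pinned_toolkit: Optional[str] = None  # version pinned by the lead programmer, if any


def required_toolkit(project: Project, history: List[str]) -> str:
    """Return the toolkit version a project needs.

    `history` is an assumed list of known toolkit versions, oldest first.
    A pinned version wins; otherwise the latest version is used.
    """
    if project.pinned_toolkit is not None:
        if project.pinned_toolkit not in history:
            raise ValueError(f"unknown toolkit version {project.pinned_toolkit!r}")
        return project.pinned_toolkit
    return history[-1]


def switch_project(project: Project, history: List[str],
                   installed: Optional[str]) -> Tuple[str, bool]:
    """Return (version that should be installed, whether an install is needed)."""
    wanted = required_toolkit(project, history)
    return wanted, wanted != installed
```

A project on an engine branch would carry a pinned version and keep getting that toolkit, while all other projects follow the latest entry in the history. The install step itself is only triggered when the wanted version differs from the installed one.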

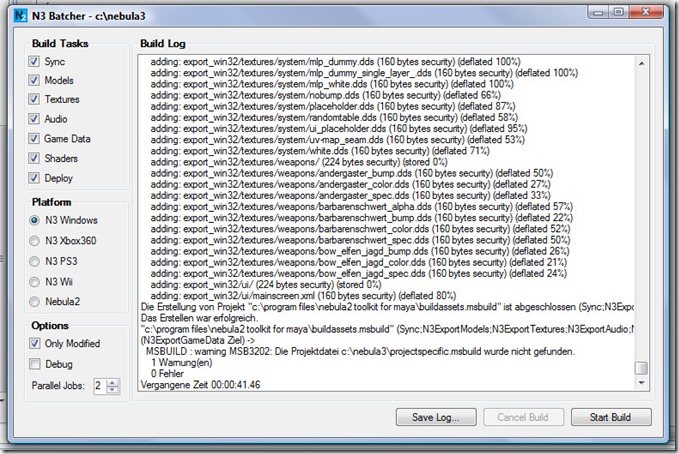

The multi-platform aspect of our build pipeline lets us create optimized data builds for the various platforms (currently Win32/D3D9, Xbox360, PS3, Wii and the legacy Nebula2 “software platform”) from the same assets with a single “flip of a switch”. From the outside the build system on a workplace machine is represented by a very simple front-end tool, the new “N3 Batcher”:

The UI is hopefully self-explanatory, except maybe for the “Sync” build task. This performs a data-sync with the latest daily build from the project’s build server before exporting locally modified data, which saves quite a bit of time in large projects with many day-to-day changes.

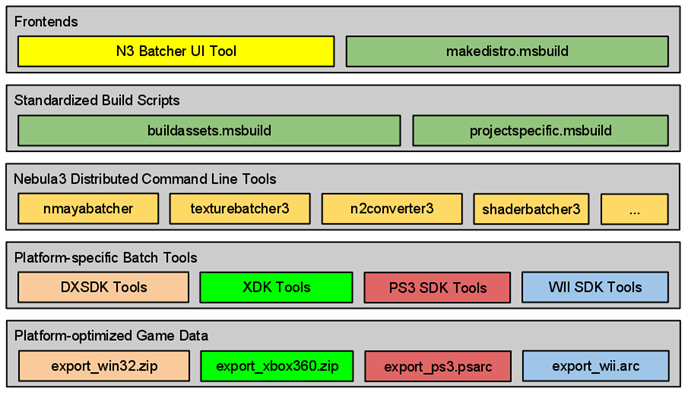

Under the hood the build system looks a bit more complex, but follows a clean layer model:

At the top there’s the “N3 Batcher” front-end tool for workplaces, and the “makedistro” MSBuild script for the master build server which provides the daily build.

Those two front-ends don’t do much more than call a centralized “buildassets” MSBuild script which takes care of build steps that are identical for all projects. If project-specific build steps are necessary, they are defined in a projectspecific.msbuild script which is located in the project directory.

The build scripts split the build process into several build tasks which form a dependency tree. Build tasks mainly call the Nebula3 command line tools, which in turn are often just wrappers for platform-specific build tools provided by the various platform SDKs. For instance, you can simply call N3’s texturebatcher3 tool with the “-platform xbox360” argument to convert textures for the Xbox360 platform, or with “-platform ps3” to convert textures into the PS3 format (provided, of course, that the Xbox360 and PS3 SDKs are installed on the build machine). Another important task of the N3 command line tools is that they distribute the build jobs across multiple cores and multiple build machines (more on that below).
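To make the dependency-tree idea a bit more concrete, here is a minimal Python sketch. The real pipeline expresses this in MSBuild scripts, so this is an illustration only. The task names in `TASKS` are made up, and `tool_command` only covers the “-platform” argument mentioned above (any other tool arguments are left out because they are not described here).

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical build tasks: each task maps to the set of tasks it depends on.
TASKS: Dict[str, Set[str]] = {
    "textures": set(),
    "meshes": set(),
    "materials": {"textures"},
    "levels": {"meshes", "materials"},
    "archive": {"levels"},
}


def build_order(tasks: Dict[str, Set[str]]) -> List[str]:
    """Return the tasks in an order where every task runs after its dependencies."""
    return list(TopologicalSorter(tasks).static_order())


def tool_command(tool: str, platform: str) -> List[str]:
    """Build the argument list for a command line tool and a target platform."""
    return [tool, "-platform", platform]


if __name__ == "__main__":
    for task in build_order(TASKS):
        print("would run build task:", task)
    print(tool_command("texturebatcher3", "xbox360"))
```

Switching the target platform then only changes the `platform` string that is handed down to the tools, while the task ordering stays the same.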

The main results of the build process are platform-specific archive files which contain all the build data for one project (the actual daily build process also compiles the executable, creates an installer, and optionally uploads the finished build to the publisher’s FTP server).

All exported data is neatly separated by platform into separate directories to enable incremental builds for different platforms on the same build machine.
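Why separate directories matter for incremental builds is easiest to see in a small sketch. The directory layout and the timestamp check below are assumptions for illustration; the actual export directory structure and up-to-date check of the N3 tools may look different.

```python
from pathlib import Path


def export_path(export_root: str, platform: str, relative_asset: str) -> Path:
    """Place every exported file under a per-platform subdirectory (assumed layout)."""
    return Path(export_root) / platform / relative_asset


def needs_export(source: Path, target: Path) -> bool:
    """Simple incremental check: export if the target is missing or older than the source."""
    if not target.exists():
        return True
    return source.stat().st_mtime > target.stat().st_mtime
```

Because the Xbox360 and PS3 versions of the same texture end up in different target files, exporting for one platform never overwrites or invalidates the data of the other, and each platform keeps its own up-to-date state on the same machine.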

Distributed Builds: The daily build dogma demands that a complete build finishes during a single night. In Drakensang we hit this 12-hour ceiling several times, until we reached a point where we couldn’t improve build time by throwing faster hardware at the problem. Thus we decided that we needed a distributed build system. Evaluating existing systems wasn’t very fruitful (mind you, this is not about distributing code compilation, but about distributing the process of exporting graphics objects, textures and other data), so we considered building our own system.

The most important task was to create a good tools framework which makes it easier to create distributed tools in the future. The result of this is the DistributedToolkitApp class, which does all the hard work (distributing build tasks across CPU cores and across several machines). Tools created with this class basically don’t need to care whether they run locally or distributed, where the input data comes from, or where the output goes. They only need to worry about the actual conversion job. Of course there’s a lot of necessary standardization underneath, for instance how exactly a “build job” is defined, and some restrictions on input and output data, but defining these standards and rules wasn’t much of a problem.

What surprised me most was how many small problems showed up until the distributed build system was robust enough for a real-world project. I had been under the impression that a TCP/IP connection inside a LAN is a relatively fool-proof way to communicate. Well, it worked “most of the time”, but we also had a lot of over-night builds break because of mysterious connection issues until we built more fault-tolerance into the communication (like automatic re-connection, or putting “vanished” build slaves onto a black-list). Well, it works now, and it’s relatively simple to maintain such a build cluster.
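The two fault-tolerance measures mentioned above (retrying a connection and black-listing machines that stay unreachable) can be sketched roughly like this. This is not how DistributedToolkitApp is implemented. The `distribute` function, the `send` callback and the `max_retries` parameter are invented for the example, and the loop hands out jobs one after another instead of in parallel to keep the idea visible.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Set, Tuple


def distribute(jobs: Iterable[str], workers: List[str],
               send: Callable[[str, str], str],
               max_retries: int = 2) -> Tuple[Dict[str, str], Set[str]]:
    """Hand out jobs round-robin; retry on connection errors, then black-list.

    `send(worker, job)` is a hypothetical transport function that returns the
    job result or raises ConnectionError if the machine cannot be reached.
    """
    blacklist: Set[str] = set()
    results: Dict[str, str] = {}
    pending = deque(jobs)
    turn = 0
    while pending:
        available = [w for w in workers if w not in blacklist]
        if not available:
            raise RuntimeError("no reachable build machines left")
        job = pending.popleft()
        worker = available[turn % len(available)]
        turn += 1
        for _attempt in range(max_retries + 1):
            try:
                results[job] = send(worker, job)
                break
            except ConnectionError:
                continue  # automatic re-connection attempt
        else:
            # All attempts failed: the machine has "vanished".
            blacklist.add(worker)
            pending.append(job)  # give the job to another machine later
    return results, blacklist
```

The important property is that a dead machine costs a few retries once, after which its job is re-queued and the rest of the night’s build carries on with the remaining machines.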

PS: we really need to update our tools icons though…